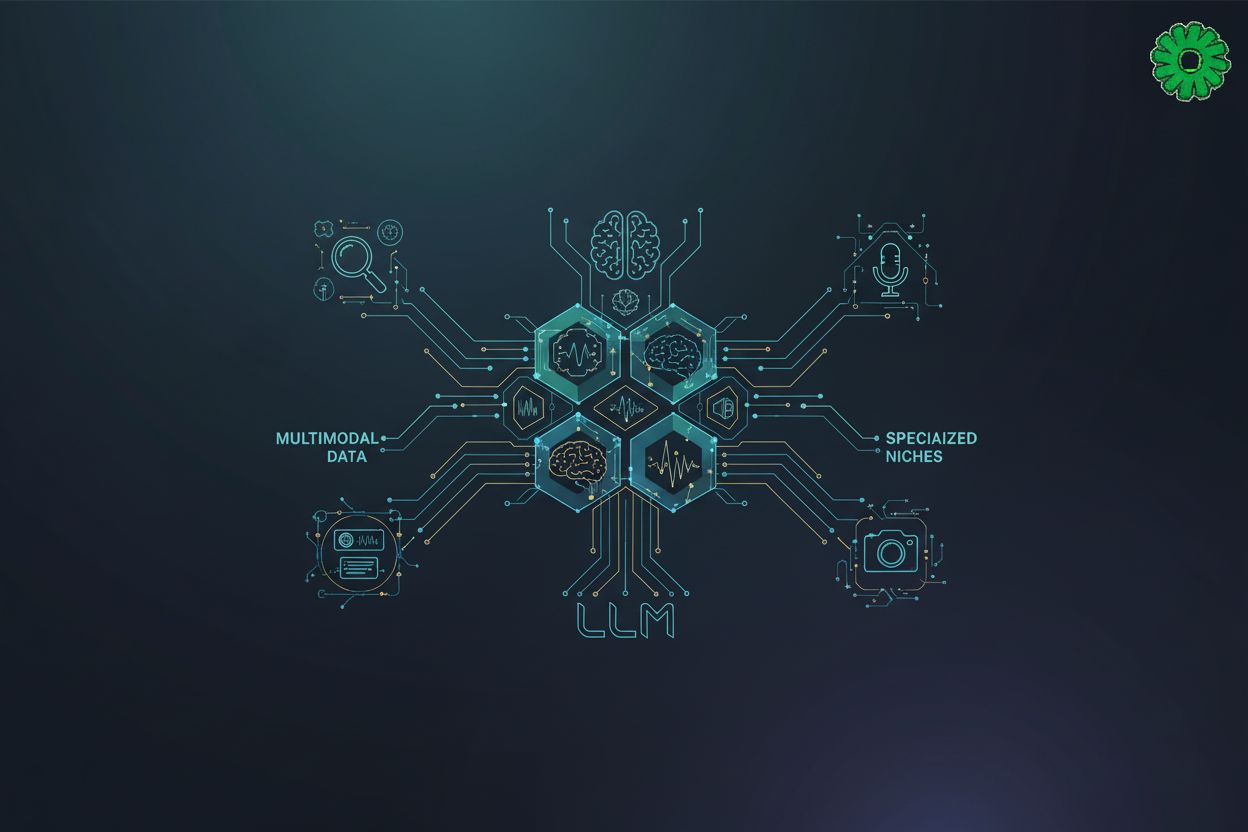

Multimodal LLMs for specialized niche content generation

The shift from text-only to multimodal LLMs

Ever felt like AI was just a glorified typewriter? For years, we've been stuck in this "text-in, text-out" loop, but honestly, the walls are finally coming down.

According to Sam Mokhtari on Medium, multimodal LLMs (MLLMs) are the next big leap because they don't just read; they actually "see" images and "hear" audio. This is huge for niche creators.

- Beyond just words: Classic models only handled text, but now they process video and images too.

- Human-like context: They understand the world more like we do, which is great for complex niches like healthcare or retail.

- Creative freedom: You can generate a story just by sending the API a photo.

In practice, a doctor could feed an MRI scan into an MLLM to get a summarized report instantly. (New AI tool helps patients decode their radiology reports) It's not just about speed; it's about the model actually "seeing" the data.

A quick heads up though: in high-stakes fields like medicine, you can't just trust the robot blindly. Because of strict rules like HIPAA and oversight from the FDA, these tools need professional supervision. You still need a human doctor to sign off on anything the AI "sees" to make sure it's actually right.

Specialized applications in education and blogging

Ever felt like textbooks are just paperweights that don't talk back? Honestly, it's frustrating when a diagram makes zero sense and there is no one around to explain it.

Multimodal LLMs are changing that by letting content actually "speak" to students. Instead of just reading about a physics engine, a student can show the AI a screenshot of their broken code and get a visual walkthrough of the fix.

- Interactive Textbooks: MLLMs can turn a flat image of a cell into an animated explanation that responds to voice questions. (Unveiling the Ignorance of MLLMs: Seeing Clearly, Answering ...)

- Inclusive Learning: For students who struggle with text, these models can turn a chapter into narrated diagrams or interactive video lessons where the AI explains the visuals in real time.

- Niche Clarity: Complex subjects like organic chemistry become way easier when the API generates 3D-like visuals on the fly to explain molecular bonds. (Designing Three-Dimensional Models That Can Be Printed on ...)

As mentioned earlier, MLLMs make learning way more engaging because they "see" what the student is looking at.

For us bloggers, the "blank page" is the worst. But now, you can just snap a photo of a new gadget or a retail storefront and tell the AI, "write a 500-word review based on this." It’s not just about speed; it’s about keeping that niche voice consistent.

"MLLMs unlock a whole new world of creative possibilities... they can generate stories based on images," which is the same creative-freedom point from the shift toward multimodal tech discussed earlier.
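To make the photo-to-review idea a bit more concrete, here is a minimal sketch of how a photo and a writing instruction might be bundled into one request. Everything here is an assumption for illustration: the function name `build_review_request`, the `prompt` and `image_base64` field names, and the `voice_notes` parameter are hypothetical and don't match any particular vendor's API, so check your provider's docs for the real request shape.

```python
import base64
import json

def build_review_request(image_bytes, word_count=500, voice_notes=""):
    """Bundle a photo and a writing instruction into one JSON-serializable dict.

    The field names are hypothetical; real multimodal APIs each define their own.
    """
    prompt = f"Write a {word_count}-word review based on this photo."
    if voice_notes:
        # Passing your own voice guidelines is one way to keep the niche voice consistent.
        prompt += f" Match this voice: {voice_notes}"
    return {
        "prompt": prompt,
        # Images are commonly sent as base64 text so they survive JSON encoding.
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
    }

# Stand-in bytes; in practice you would read a real image file from disk.
fake_photo = b"\x89PNG placeholder bytes"
request = build_review_request(fake_photo, voice_notes="casual, first person")
body = json.dumps(request)  # this string is what would go over the wire
```

The point of the sketch is just that the image and the text travel together in a single request, which is what lets the model write about what it "sees."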

If you’re repurposing content, it’s a lifesaver. You can feed a webinar video into the model and it'll spit out a polished blog post with perfectly placed image captions. It basically handles the boring paraphrasing so you can focus on the big ideas.

Ensuring content authenticity and humanized output

Ever felt like AI content sounds like a bored robot reading a phone book? Honestly, it’s the biggest vibe killer when you’re trying to build trust with your audience.

When we use these MLLMs for niche stuff, like a medical blog or a school lesson, authenticity isn't just a "nice to have," it's everything. If the output feels fake, people check out instantly.

- Human-in-the-loop: This is the most important part. Never just copy-paste. A teacher should always tweak AI-generated physics experiments to make sure they're safe and actually make sense.

- Fact-checking: MLLMs can "hallucinate" visuals too. If an API generates a chart for a finance report, you gotta double-check those numbers against real data.

- Voice consistency: Use your own slang or specific industry jargon that an AI might miss.
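The fact-checking step in the list above can be partly automated. Here's a minimal sketch, assuming you've already pulled the numbers out of the generated chart into a dictionary. The function name `find_mismatches`, the sample figures, and the 1% tolerance are all made up for illustration.

```python
import math

def find_mismatches(chart_values, source_values, rel_tol=0.01):
    """Return (label, shown, expected) for chart values that are missing from,
    or differ by more than rel_tol from, the source data."""
    mismatches = []
    for label, shown in chart_values.items():
        expected = source_values.get(label)
        if expected is None or not math.isclose(shown, expected, rel_tol=rel_tol):
            mismatches.append((label, shown, expected))
    return mismatches

# Made-up numbers: what the generated chart shows vs. what your spreadsheet says.
chart = {"Q1": 120.0, "Q2": 135.0, "Q3": 180.0}
source = {"Q1": 120.0, "Q2": 135.4, "Q3": 150.0}

for label, shown, expected in find_mismatches(chart, source):
    print(f"{label}: chart shows {shown}, source says {expected}")
# prints "Q3: chart shows 180.0, source says 150.0"
```

Small rounding differences like Q2 pass, while the invented Q3 figure gets flagged. A script like this only catches numeric drift, so a human still needs to judge whether the chart tells the right story.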

To keep things honest, some creators run drafts through AI-detection tools like GPTZero. It’s not just about catching "cheaters," but more about having a human-level quality check so the content doesn't lose its soul or accuracy during the multimodal process.

I've seen bloggers get burned because they didn't check a "visual summary" that totally misrepresented a product. It's about using the tech as a co-pilot, not the captain.

Now, let's finally look at the actual nuts and bolts of how these models are put together.

The technical side of multimodal architecture

Ever wondered why your AI can suddenly "see" that messy retail shelf or a blurry medical scan? Honestly, it feels like magic, but it's just some clever plumbing under the hood.

- Modality Encoders: These are like translators. They take raw images or audio and turn them into embeddings. Think of embeddings as mathematical representations of data: basically a bunch of numbers that let the AI understand the relationships between different types of input, like how the word "apple" relates to a picture of a red fruit.

- Input Projector: This is the secret sauce. It maps the visual data into the same mathematical "space" as the text data. That way the image numbers and text numbers can talk to each other, and the LLM can process them both at the same time.

- LLM Backbone: As noted earlier by Mokhtari, this is the main brain that does the heavy lifting and reasoning.

In healthcare, this lets a model "read" an X-ray alongside a patient's chart because the projector has put them in a language the brain understands. Pretty wild.
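If you want to see the plumbing in miniature, here's a toy sketch of the encoder, projector, and backbone-input steps described above. It's heavily simplified and rests on assumptions: the three hand-picked image features, the 2-number text embeddings, and the hand-written `PROJECTOR_WEIGHTS` are all hypothetical stand-ins, since real models learn these from data and use far larger vectors.

```python
import math

def encode_image(pixels):
    """Toy modality encoder: turn a grayscale image (rows of 0..1 values) into features."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    contrast = max(flat) - min(flat)
    return [mean, 1.0 - mean, contrast]

def project(features, weights):
    """Toy input projector: a linear map from image-feature space to text-embedding space."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical 2-number text embeddings; real ones are learned and much longer.
TEXT_EMBEDDINGS = {"bright": [1.0, 0.0], "dark": [0.0, 1.0]}

# Hypothetical projector weights (2 outputs x 3 inputs); real projectors are trained.
PROJECTOR_WEIGHTS = [[1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0]]

def build_backbone_input(pixels, prompt_words):
    """Put the projected image vector and the prompt's text vectors in one sequence."""
    image_token = project(encode_image(pixels), PROJECTOR_WEIGHTS)
    return [image_token] + [TEXT_EMBEDDINGS[w] for w in prompt_words]

sunny_photo = [[0.9, 0.8], [1.0, 0.9]]
sequence = build_backbone_input(sunny_photo, ["bright", "dark"])
image_token = sequence[0]

# Because image and text now share one space, we can compare them directly.
closest_word = max(TEXT_EMBEDDINGS, key=lambda w: cosine(image_token, TEXT_EMBEDDINGS[w]))
print(closest_word)  # prints "bright"
```

The key thing to notice is that every vector in `sequence` has the same length after projection. That shared shape is what lets a single backbone treat an image and a sentence as one input.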

Future outlook for niche content creators

So, where do we go from here? Honestly, the "text-only" era already feels like a dusty old library. We’re moving toward a world where your AI doesn't just read your prompt but actually understands the 3D space around you.

As noted earlier by Mokhtari, the next leap involves adding even more senses to the mix. We aren't just talking about photos anymore; we're looking at a future with:

- 3D and VR Integration: Imagine a retail designer feeding a virtual store layout into an API to get instant heatmaps on customer flow.

- Sensor Data: In finance or logistics, models will "feel" real-time sensor feeds to flag possible market crashes or shipping delays early.

- Self-Supervised Learning: This is huge because models will start learning from raw video and audio without humans needing to tag every single thing.

Don't let the tech scare you off. Whether you're a teacher making interactive lessons or a blogger trying to stay relevant, MLLMs are just better tools for your belt. Just remember to keep that human touch. As mentioned earlier, staying honest and keeping a human in the loop is the only way to win. The future is messy, but it’s gonna be a lot of fun.