Multimodal LLMs for Niche Academic Research and Specialized Content Synthesis

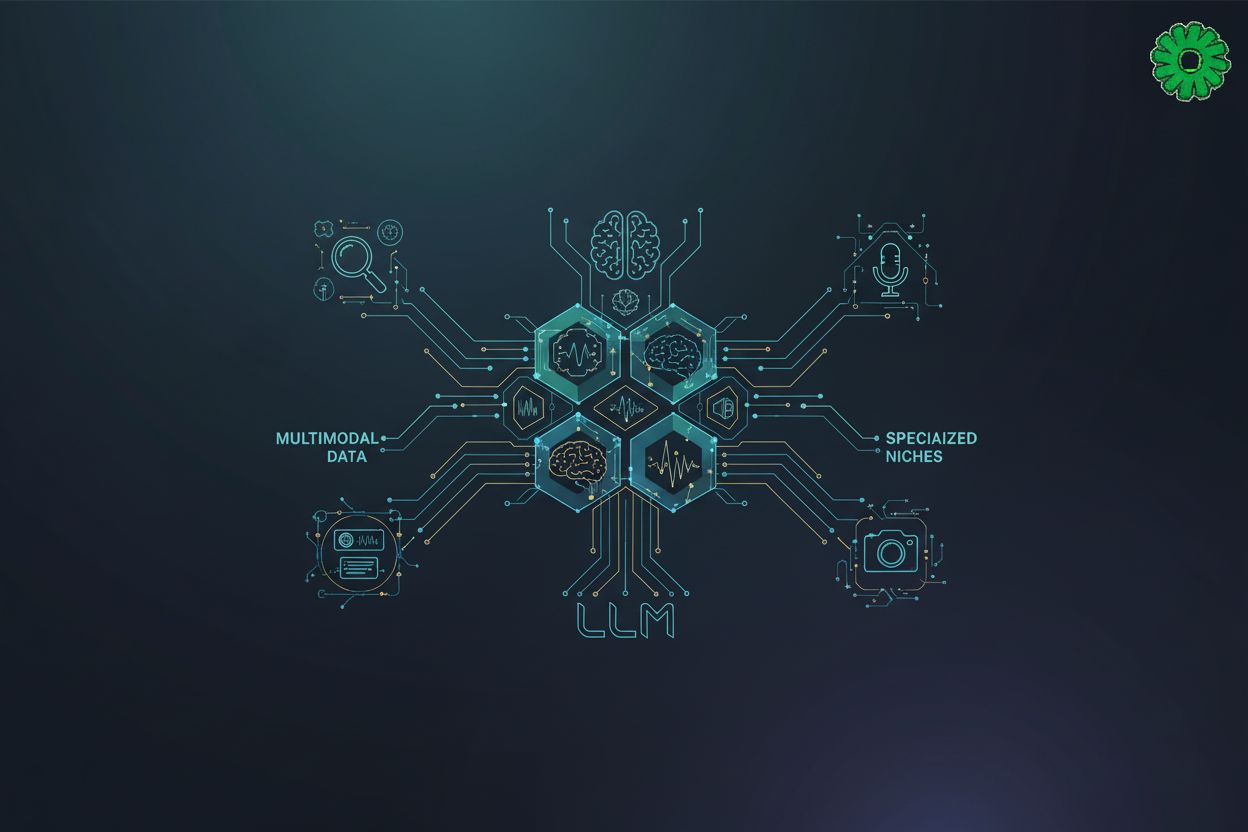

The Rise of Multimodal LLMs in Research

Ever tried explaining a complex medical chart to a chatbot only for it to say "I'm just a text model"? It's frustrating, but things are changing fast.

We are moving past the days when AI just read words. Now these models are becoming "multimodal," meaning they can take in images, audio, and video alongside text. According to the survey MM-LLMs: Recent Advances in MultiModal Large Language Models, these new systems are built by "augmenting" existing LLMs to handle other modalities through cost-effective training strategies rather than by training a new model from scratch.

- Context is everything: Traditional models miss the nuance in a blurry x-ray or a messy retail floor plan. MM-LLMs bridge that gap.

- Cheaper Training: Instead of starting from scratch, devs use "cost-effective training strategies" to add vision to models that already know how to talk.

- Diverse uses: In finance, it might be scanning hand-drawn charts; in schools, it's helping students understand physics through video clips.

"The resulting models... preserve the inherent reasoning of LLMs but also empower a diverse range of MM tasks." (MM-LLMs: Recent Advances in MultiModal Large Language Models)

It’s basically giving the brain we already built a pair of eyes. Next, we'll look at the technical foundations that allow these models to actually "see" data.

Technical Foundations of MM-LLMs

Ever wondered how a machine actually "sees" a medical scan and then talks about it like a doctor? It's not magic; it's just really intense math and some clever plumbing.

Most people think we just feed images into a chat box, but the architecture is more specific than that. The MM-LLMs survey cited earlier lays out a taxonomy of 126 different models, each with its own "formulation" for connecting vision and text. This includes categories like "Encoder-based" models that focus on understanding images, or "Tool-augmented" models that use external software to help the AI process what it sees. In the common setup, a vision encoder turns an image into "tokens" that the LLM backbone can actually work with.

- The LLM is the Boss: Even with all the new eyes and ears, the core reasoning and decision-making still happen in the text-based brain.

- Mainstream Benchmarks: We don't just guess whether they work. Researchers score models on established benchmarks, and domain-specific tests can check whether a model handles things like a messy retail floor plan or a complex finance chart.

- Pipeline Efficiency: Instead of retraining the whole brain (which is expensive!), devs just train a small "adapter" layer to translate between the image and the words.
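
To make the encoder-plus-adapter idea above a bit more concrete, here is a toy sketch in plain Python. Everything in it is an assumption for illustration: the sizes are tiny, the weights are random, a single linear map stands in for a real pretrained vision encoder, and the helper names (`patchify`, `linear`, `make_weights`) are hypothetical. It only shows how the shapes line up, not how any particular MM-LLM is implemented.

```python
import random

def patchify(image, patch):
    """Split an H x W grayscale image (list of rows) into flattened patch vectors."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h - patch + 1, patch):
        for left in range(0, w - patch + 1, patch):
            patches.append([image[top + r][left + c]
                            for r in range(patch) for c in range(patch)])
    return patches

def linear(vectors, weights):
    """Multiply each vector (length d_in) by a d_in x d_out weight matrix."""
    d_out = len(weights[0])
    return [[sum(v[i] * weights[i][j] for i in range(len(v))) for j in range(d_out)]
            for v in vectors]

def make_weights(rng, d_in, d_out):
    """Random d_in x d_out matrix; a stand-in for learned parameters."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(d_out)] for _ in range(d_in)]

rng = random.Random(0)
PATCH, D_VISION, D_LLM = 4, 8, 16  # toy sizes, chosen only for illustration

# Stand-in for a frozen, pretrained vision encoder (assumption: one linear map).
encoder_w = make_weights(rng, PATCH * PATCH, D_VISION)
# The small "adapter" that would be trained to map vision features
# into the LLM's embedding size.
adapter_w = make_weights(rng, D_VISION, D_LLM)

image = [[rng.random() for _ in range(8)] for _ in range(8)]  # fake 8x8 image
patches = patchify(image, PATCH)            # 4 patches of 16 pixels each
features = linear(patches, encoder_w)       # 4 vectors of size D_VISION
image_tokens = linear(features, adapter_w)  # 4 vectors of size D_LLM

# Placeholder text embeddings; a real LLM would look these up from its vocabulary.
text_tokens = [[0.0] * D_LLM for _ in range(3)]
llm_input = image_tokens + text_tokens      # image "tokens" sit next to text tokens

print(len(image_tokens), len(image_tokens[0]), len(llm_input))
```

In this sketch, only `adapter_w` would be updated during training, which is where the cost savings in the bullet above would come from.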

Honestly, without these specific training recipes, the AI would just be guessing. As models gain multimodal capabilities, the challenge shifts from "how they see" to "how they communicate" those findings to students in a human-centric way.

Synthesizing Specialized Content for Education

Let's be real—nothing kills a student's interest faster than a textbook that reads like a legal manual. When we use AI to build educational content, there is this massive risk of it feeling "robotic" and cold.

The trick isn't just generating facts; it's about making sure the machine sounds like a person who actually cares about the subject. As the MM-LLMs survey mentioned earlier suggests, these models are getting better at reasoning, but they still need a human touch to keep things authentic.

- Balance is key: You want the speed of AI but the "vibe" of a teacher. If the content is too perfect, kids tune out.

- Authenticity tools: I've seen teachers use tools like gpt0.app, which helps identify and refine AI-generated text, to make sure the resources they give students actually feel trustworthy and not just copy-pasted.

- Contextual examples: Instead of generic math problems, use multimodal inputs to turn a photo of a local bridge into a physics lesson.

Honestly, if we don't fix the "uncanny valley" of educational AI, we're just making digital paperweights. Next, we'll look at what these models mean for digital content creators working with messy, niche data.

Future Directions for Digital Content Creators

So, where do we go from here? Honestly, the future for us creators isn't just about typing better prompts. It's about how we weave together different types of media without losing our soul in the process.

If you're a blogger, MM-LLMs are basically your new creative director. Instead of just rewriting a paragraph, these models can look at a messy infographic from a finance report and help you paraphrase the data into a story that actually makes sense to a human reader. It's about cross-modal context: the AI understands that the "spike" in a chart is the same thing as "rapid growth" in your text.

- Visual Storytelling: You can take a photo of a product prototype and ask the AI to draft a blog post that highlights the textures and colors it "sees."

- Accuracy & Compliance: For those in healthcare or retail, MM-LLMs help make sure your rewritten content doesn't hallucinate facts that contradict the original images.

- Authenticity at Scale: As mentioned earlier, tools like gpt0.app are huge for keeping things real. They help content creators make sure their text descriptions of images don't feel like a cold, robotic mess.
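
The accuracy point in the list above can be backed by a simple sanity check, even without a model in the loop. Here is a minimal sketch in Python, assuming you already have the values read off the original chart (by hand or by a model). The helper name `unsupported_numbers`, the 1% tolerance, and the sample data are all made up for illustration.

```python
import re

def unsupported_numbers(draft, source_values, tolerance=0.01):
    """Return numbers in the draft that match no value taken from the source image."""
    # Match integers, thousands-separated integers, and decimals (e.g. 48, 1,200, 12.5).
    matches = re.findall(r"\d+(?:,\d{3})*(?:\.\d+)?", draft)
    found = [float(m.replace(",", "")) for m in matches]
    return [n for n in found
            if not any(abs(n - v) <= tolerance * max(abs(v), 1.0)
                       for v in source_values)]

# Hypothetical values read off a chart.
chart_values = [12.5, 48.0, 1200.0]
draft = "Revenue grew 12.5% to 1,200 units, with 52 stores reporting."

print(unsupported_numbers(draft, chart_values))  # [52.0]
```

A flagged number isn't automatically a hallucination; it just tells you which claims in the rewritten text to compare against the original image yourself.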

The goal isn't to let the machine do everything. It is to use these "eyes" to see patterns we might miss, while we handle the vibe and the truth. If we get this right, digital content becomes way more than just words on a screen. It becomes an actual experience.