Hybrid Human-AI Collaborative Editing Patterns for Content Authority

The Rise of Hybrid Intelligence in Professional Writing

Ever wonder why some AI-generated content feels like a robotic manual while other stuff actually grabs you? It's usually because the person behind the screen knows how to dance with the machine instead of just letting it lead.

The old way was just hitting "generate" and hoping for the best, but that's basically just automation: fast, but usually lacking any real soul. Modern workflows are shifting toward augmentation, where the AI handles the heavy lifting of data and drafting while the human provides the "taste" and direction.

To really win at this, you need "double literacy." This isn't just knowing how to call an API or write a prompt; it's understanding how your own brain works (human literacy) alongside how the algorithm thinks (algorithmic literacy). For example, a concrete human literacy skill is cognitive bias recognition: knowing when you're just agreeing with the bot because it's easier, rather than checking if the logic actually holds up.

Before we dive in, we gotta establish the ground rules. Hybrid intelligence only works if you have a foundation of ethics and transparency. You can't just outsource your conscience to a server in California. Authenticity runs on credibility; if you aren't transparent about where the data comes from, the whole thing falls apart.

You can already see this split in high-stakes fields:

- Healthcare efficiency: Doctors use assistants to scan millions of records in seconds, but the human expert still makes the final high-stakes call based on the patient's vitals.

- Finance and Fraud: Banks use algorithms to flag weird patterns, but human analysts are the ones who investigate the context to make sure a legit customer doesn't get their card blocked for no reason.

Natural intelligence (NI) brings the "wicked problem" solving skills that machines just don't have yet. According to California Management Review, we have to diagnose if a problem is "familiar" or "wicked" before deciding how much to let the AI take over.

The World Economic Forum's Future of Jobs Report 2020 projected that by 2025, 85 million jobs might be displaced while 97 million new ones pop up that focus on this human-machine split.

Next, we'll look at the specific patterns you need to diagnose these problems before you start typing.

Diagnostic Patterns for Content Authority

Ever tried to fix a "hallucinating" chatbot by just giving it more data, only to realize the problem wasn't the data, but how you framed the task? It's a classic mistake: treating every AI problem like a nail just because you have a shiny new hammer.

To actually get content authority, you have to stop thinking of AI as a magic box and start seeing it as a partner. The trick is knowing when to let the machine drive and when to grab the steering wheel before you hit a wall.

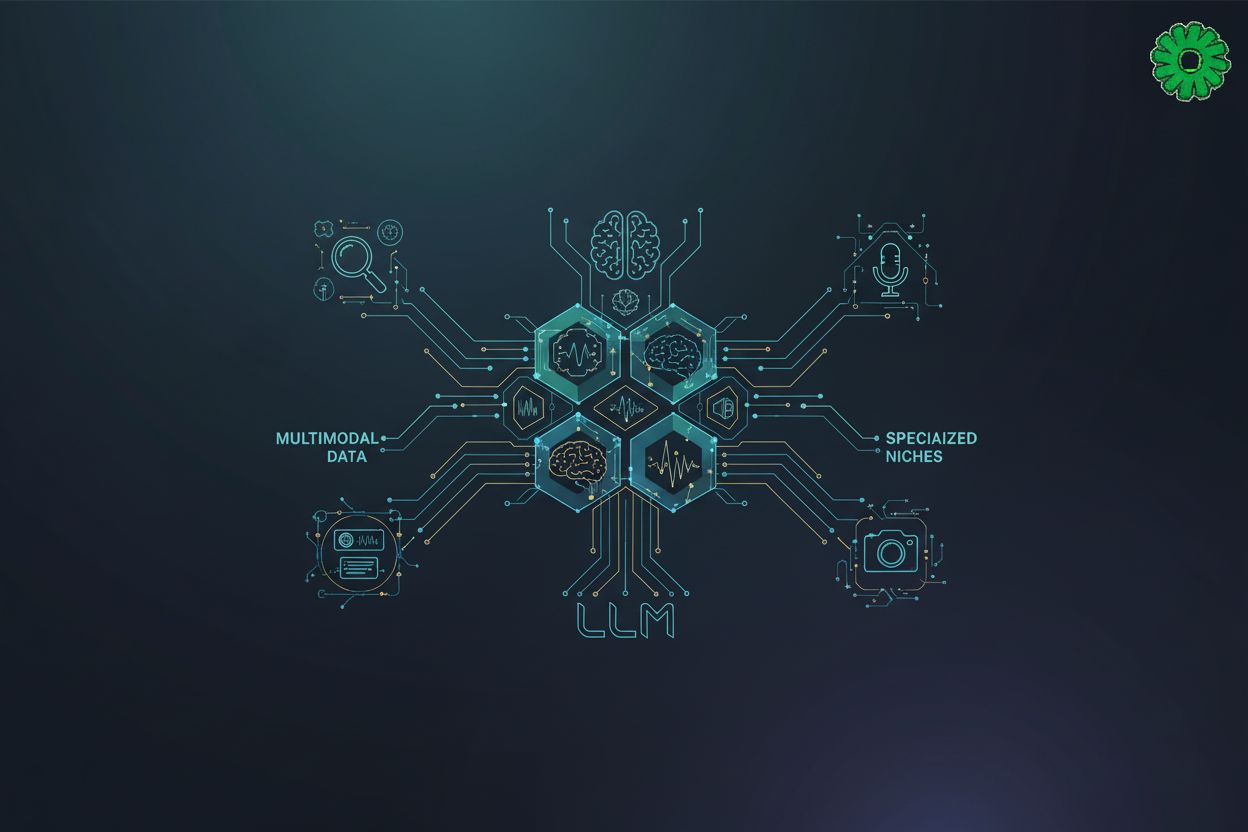

We need to figure out if a problem is "familiar" or "wicked." A 2025 insight from California Management Review introduces the Hybrid Diagnostic Cube, which helps you map out three big things: familiarity, complexity, and "wickedness."

- Familiarity: Have we solved this a thousand times (like formatting a bibliography)?

- Complexity: Are there a million moving parts (like syncing global supply chain updates)?

- Wickedness: Does the "right" answer depend on values or ethics (like writing a sensitive medical disclosure)?

If you’re dealing with a "wicked" problem, just hitting 'generate' is a recipe for disaster. You need a different mode of collaboration.

There are basically four ways to play this:

- Automated Execution: Use this for the boring, repeatable stuff. Think: converting Markdown to HTML.

- Machine-Augmentation: This is where the AI helps you see things you missed.

- Human-in-the-Loop: The AI does the heavy lifting, but you're the editor-in-chief. You're correcting the "hallucinations."

- Expert Judgment: For high-stakes ethics. Here, the AI is just a research assistant, and the human "NI" does the real thinking.
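If it helps to see the diagnosis as a decision rule, here is a minimal sketch that maps the three cube axes onto the four modes. The 0-to-1 scores, the 0.5 cutoff, the order of the checks, and the names `ProblemProfile` and `choose_mode` are all illustrative assumptions, not something the California Management Review framework prescribes.

```python
from dataclasses import dataclass


@dataclass
class ProblemProfile:
    """Scores from 0.0 (low) to 1.0 (high) on each axis of the diagnostic cube."""
    familiarity: float
    complexity: float
    wickedness: float


def choose_mode(profile: ProblemProfile, cutoff: float = 0.5) -> str:
    """Map a problem profile to one of the four collaboration modes.

    The cutoff and the ordering of the checks are assumptions for illustration.
    """
    # Value-laden problems go to a human expert first, whatever else is true.
    if profile.wickedness >= cutoff:
        return "Expert Judgment"
    # Many moving parts: let the machine draft, keep a human editor on top.
    if profile.complexity >= cutoff:
        return "Human-in-the-Loop"
    # Simple and well-trodden: safe to automate end to end.
    if profile.familiarity >= cutoff:
        return "Automated Execution"
    # Simple but unfamiliar: use the machine to surface what you might miss.
    return "Machine-Augmentation"
```

With these assumed scores, formatting a bibliography (`ProblemProfile(0.9, 0.1, 0.0)`) lands in Automated Execution, while a sensitive medical disclosure (`ProblemProfile(0.2, 0.3, 0.9)`) lands in Expert Judgment. Checking wickedness first is a deliberate choice: it keeps the ethics call with a human even when the task looks routine.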

A 2025 report by TechClass notes that companies that focus on augmenting humans, rather than just automating them, actually outperform the competition by 3x.

Honestly, the goal isn't to work less; it's to work on the stuff that actually matters. Don't let the bot handle your ethics.

The "Missing Middle" Roles

As we shift into these new workflows, we're seeing the rise of "missing middle" roles. These aren't just prompt engineers; they are the bridge between human intent and machine output.

- AI Trainers: These folks teach the bot how to not sound like a jerk. They feed it high-quality data and refine the "personality" of the model so it aligns with brand values.

- Explainers: They translate "black box" decisions for regular people. If an AI denies a loan or flags a medical record, the Explainer breaks down the "why" so humans can understand the logic.

- Sustainers: These are the guardians. They make sure the system stays ethical over time, checking for bias creep and ensuring the model doesn't start hallucinating after a new update.

Without these roles, your hybrid workflow is just a fancy way to make mistakes faster.

Practical Collaborative Editing Workflows

So, you've got your AI tools and your team of writers, but things still feel... clunky? It's usually because there's no clear "dance" between the human and the machine.

To fix this, we need to look at actual workflows. It's about finding that sweet spot where the algorithm does the heavy lifting and you stay the boss of the "soul" of the piece.

- The Draft Phase: Use the API to pull in raw data or summarize long reports. It's great at the "what," but it sucks at the "why it matters."

- The Soul Injection: This is where you come in. You add that personal story from last Tuesday or that weird metaphor that only a human would think of.

- The Audit Step: This is the most important part. You don't just proofread; you run a verification check. Does the data match the source? Is the tone consistent? This is where the Sustainer role usually lives, making sure the final output doesn't violate any ethical guardrails.
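To make the "does the data match the source?" part of the audit concrete, here is a minimal sketch that flags any figure in the draft that never appears in the source material. The function name `unsupported_figures` and the number pattern are assumptions for illustration; it only does exact string matching, so treat a hit as a prompt for a human to look, not as a verdict.

```python
import re

# Matches integers, decimals, thousands-separated numbers, and percentages.
NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")


def unsupported_figures(draft: str, source: str) -> list[str]:
    """Return figures that appear in the draft but nowhere in the source text."""
    source_figures = set(NUMBER.findall(source))
    missing = []
    for figure in NUMBER.findall(draft):
        # Keep first-seen order and skip repeats of the same figure.
        if figure not in source_figures and figure not in missing:
            missing.append(figure)
    return missing


source = "Revenue grew 12% to 4.2 million in 2024."
draft = "Revenue jumped 12% to 4.5 million in 2024."
print(unsupported_figures(draft, source))
```

Here the draft's "4.5" has no match in the source, so it is the one figure that gets flagged for review. A draft that writes "12 percent" where the source says "12%" would also be flagged, which is the kind of false alarm a human auditor clears in seconds.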

Sometimes you've already written the thing, but it feels... off. Here, the AI isn't the writer; it's the smartest editor in the room.

- Gap Identification: Feed your draft into the LLM and ask it to find logical leaps.

- Humanizing the Bot: If the AI wrote a section that feels like a manual, you use "human literacy" to rewrite the cadence. Change the sentence lengths. Throw in an "honestly" or a "look."
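One rough way to spot that "manual" cadence before you rewrite it is to measure how much the sentence lengths vary. The sketch below is an assumption-heavy heuristic: the names `sentence_lengths` and `cadence_report`, the naive split on sentence punctuation, and the `min_spread` default of 3.0 words are all made up for illustration.

```python
import re
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def cadence_report(text: str, min_spread: float = 3.0) -> dict:
    """Summarize sentence-length variety; low spread suggests a flat rhythm."""
    lengths = sentence_lengths(text)
    # Population standard deviation of words per sentence.
    spread = pstdev(lengths) if len(lengths) > 1 else 0.0
    return {
        "sentences": len(lengths),
        "mean_words": mean(lengths) if lengths else 0.0,
        "spread": spread,
        "monotone": spread < min_spread,
    }


flat = "The tool is fast. The tool is good. The tool is new."
print(cadence_report(flat)["monotone"])
```

Three four-word sentences in a row have zero spread, so the sample above comes back as monotone. The number only tells you where to look; deciding how the paragraph should actually sound is still the human's job.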

Building Trust and Authenticity in Digital Spaces

Ever wonder why some "verified" articles still feel like they were written by a blender? It's usually a trust issue. Building actual authority in 2025 isn't about hiding the machine; it's about being honest about how you're using it.

- Reputation through Transparency: As we mentioned in the intro, authenticity runs on credibility. If you're a teacher or a publisher, showing your work—literally—builds more trust than any "verified" badge.

- Verification Stack: Use tools like GPTZero to check if your final polish actually feels human. It’s not about "catching" AI; it’s about making sure the machine didn't flatten your unique voice.

- Free Resources for All: For students and educators, access to these tools shouldn't be a luxury. Free detection and "humanizing" resources are essential today to keep the playing field level.

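A simple integrity gate might look like this (`call_detection_api` is a placeholder for whatever detection service you use):

```python
def call_detection_api(text):
    # Hypothetical placeholder: wire this to your detection service.
    # It should return a "human" score between 0 and 1.
    raise NotImplementedError("connect a real detection service here")


def check_content_integrity(text, human_score_threshold=0.7):
    # Integrity gate: hold any draft whose human score is below the threshold
    score = call_detection_api(text)
    if score < human_score_threshold:
        return "Needs more natural intelligence (NI) intervention"
    return "Ready for publication"
```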

A 2023 report from Stanford HAI highlights that guardrails like regular bias audits and transparent data practices are critical for brand reputation.

Educational Resources and the Future of Learning

The shift in education isn't about the tech itself—it’s about how we stop being "content firehoses" and start becoming curators of human-machine collaboration.

The old model of a teacher dumping info into kids' heads is basically dead. Now, we’re moving toward a world where the educator acts more like a conductor. We need to lean into "double literacy"—teaching students to understand their own brains (like emotional resonance checking) alongside the way an algorithm processes data.

- From Provider to Curator: Teachers help students vet what the AI spits out to make sure it isn't just a "hallucination."

- Classroom Ethics: We need to talk about the ethical use of paraphrasing tools. If a student uses an AI tool to clean up their grammar, is that cheating? Or is it just modern editing?

We're starting to see those "missing middle" roles pop up in schools. For example, an AI Explainer might help a student understand why a specific grading algorithm flagged their paper, while a Sustainer ensures the department's use of AI doesn't accidentally discriminate.

Overcoming the Common Pitfalls of AI Adoption

Look, we all know the feeling: that slight pang of anxiety when a new tool starts doing 70% of your job. The real pitfall isn't the AI itself; it's how we let it sit in our org charts. If you treat it like a replacement, you lose your best people.

- Address the replacement myth: Companies focusing on augmenting humans actually see way better performance. It’s about making your team "super-powered."

- Stop the "black box" syndrome: When people don't understand why an AI made a choice, they don't trust it. You need those "explainer" roles to bridge the gap.

- Iterative feedback loops: Don't just ship it. You need a constant flow where humans check the machine.

There is a massive risk of over-reliance on algorithmic outputs. If you're solving a "wicked" problem (like a sensitive medical disclosure), the machine should be in the passenger seat, period.

In the end, the goal is to keep the "human in the loop" as a fail-safe. If you prioritize "algorithmic literacy" alongside your team's natural creativity, you won't just survive the AI shift; you'll actually lead it. By embracing the missing middle roles and focusing on augmentation over automation, we can build a future where technology makes us more human, not less. Stay human.